Quality Assurance

From development to scale

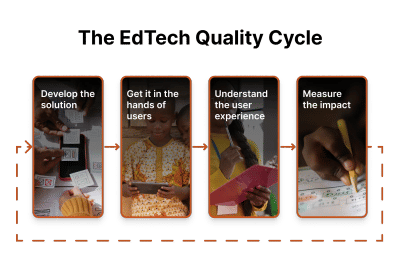

Our framework provides an overview of how to assess the quality of AI EdTech throughout its lifecycle.

From the initial idea through development to deployment at scale, high-quality AI education products need to pass through successive stages of quality checks in order to become evidence-informed and to demonstrate efficiency gains or impact on educational outcomes.

Introduction

Many AI EdTech solutions lack robust evidence of their impact on educational outcomes. Several factors contribute to this, but two major challenges stand out: the duration and the cost of traditional impact evaluations such as randomised controlled trials (RCTs). Both discourage early-stage developers from generating evidence of their solutions' effectiveness.

Moreover, waiting 12 to 18 months for findings is no longer compatible with the fast-changing environment of AI solutions, where new models are released regularly.

Gold-standard RCTs remain irreplaceable for establishing long-term causal effects, but the evidence-generation journey needs a rethink. It should not be a single, final step, but an iterative cycle that spans all stages of development and deployment.

Our Quality Assurance framework presents a cyclical model that can be adapted to each stage and to any budget: from lab testing to piloting, and then to scaling the innovation across hundreds of schools.

The EdTech Quality Cycle

An evidence-informed product development cycle.

Develop the solution

Building a robust AI EdTech solution begins with strong foundational work:

Pedagogical foundations

Start by defining a clear, evidence-informed Theory of Change (ToC) that maps the causal pathways from activities and outputs to the desired learning outcomes and long-term impact. The ToC should also ensure that the product's pedagogical approach aligns with the latest science of learning, and that its content is both locally contextualised and of high quality.

Safety and ethics

It is fundamental to design products that cause no harm to users, particularly students. Complying with local data regulations from the start is also best practice, ensuring that all data is collected, stored and processed lawfully.

During development, the product's underlying AI models should be rigorously tested. This involves using education-specific benchmarks and evaluating generated outputs against expert human responses, then adjusting the models accordingly to make sure the AI behaves as intended.
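As a rough illustration of comparing generated outputs with expert human responses, the sketch below assumes that the model's outputs and the expert's responses have both been scored on the same hypothetical 0 to 3 rubric, item by item. It reports raw agreement and Cohen's kappa, which discounts the agreement two raters would reach by chance alone. The rubric, the scores and the function names are illustrative assumptions, not part of the framework.

```python
from collections import Counter


def percent_agreement(model_labels, expert_labels):
    """Share of items on which the model's rubric score matches the expert's."""
    if len(model_labels) != len(expert_labels) or not model_labels:
        raise ValueError("expected two non-empty label lists of equal length")
    matches = sum(m == e for m, e in zip(model_labels, expert_labels))
    return matches / len(model_labels)


def cohens_kappa(model_labels, expert_labels):
    """Cohen's kappa: (observed - chance agreement) / (1 - chance agreement)."""
    p_observed = percent_agreement(model_labels, expert_labels)
    n = len(model_labels)
    model_counts = Counter(model_labels)
    expert_counts = Counter(expert_labels)
    # Chance agreement: probability that both raters give the same score
    # if each labelled independently at its own base rates.
    p_chance = sum(model_counts[k] * expert_counts[k] for k in model_counts) / (n * n)
    if p_chance == 1:
        return 1.0  # both raters used one identical score throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical 0-3 rubric scores for eight student answers.
model = [2, 1, 0, 3, 2, 2, 1, 0]
expert = [2, 1, 1, 3, 2, 1, 1, 0]
print(percent_agreement(model, expert))       # 0.75
print(round(cohens_kappa(model, expert), 2))  # 0.66
```

Kappa is the stricter check: in this toy example the model matches the expert on 75% of items, but once chance agreement is removed the score drops to about 0.66.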

Get it in the hands of users

Getting a solution into the hands of real users is a crucial step that requires a well-defined strategy from the outset. This phase of the framework adapts to the current development stage, from recruiting a small group of alpha or beta testers for early feature testing to planning large-scale randomised controlled trials.

Our framework focuses on three key aspects:

Access

Defining the optimal path to users includes identifying target segments, surveying local infrastructure and device access, and then aligning the product's cost with user affordability.

Implementation

Once users have access, it is vital to understand how they are using the product by evaluating the fidelity of implementation (whether the product is being used as designed), and to identify what supervision or support is needed to make product use effective.
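Fidelity is easier to measure once the intended dosage is made explicit. The sketch below assumes a hypothetical target of at least three sessions of 15 minutes or more per week, and computes, for every enrolled user, the share of weeks in which that target was met. The thresholds, the session-log format and the function name are assumptions for illustration; a real target would come from the product's Theory of Change.

```python
from collections import defaultdict

# Hypothetical dosage target; in practice it would come from the product's ToC.
MIN_SESSIONS_PER_WEEK = 3
MIN_SESSION_MINUTES = 15


def weekly_fidelity(sessions, enrolled, n_weeks):
    """Return {user: share of the n_weeks in which the user met the dosage target}.

    sessions: iterable of (user_id, week_index, minutes), with week_index in 0..n_weeks-1.
    enrolled: every user who was given access, so non-users score 0.0 instead of vanishing.
    """
    qualifying = defaultdict(int)  # (user, week) -> number of sessions long enough to count
    for user, week, minutes in sessions:
        if minutes >= MIN_SESSION_MINUTES:
            qualifying[(user, week)] += 1
    return {
        user: sum(qualifying[(user, w)] >= MIN_SESSIONS_PER_WEEK for w in range(n_weeks)) / n_weeks
        for user in enrolled
    }


# Hypothetical two-week log for three enrolled users ("c" never logged in).
enrolled = ["a", "b", "c"]
sessions = [
    ("a", 0, 20), ("a", 0, 25), ("a", 0, 20), ("a", 1, 20), ("a", 1, 30), ("a", 1, 15),
    ("b", 0, 20), ("b", 0, 10), ("b", 0, 20), ("b", 1, 15), ("b", 1, 15), ("b", 1, 15),
]
print(weekly_fidelity(sessions, enrolled, n_weeks=2))  # {'a': 1.0, 'b': 0.5, 'c': 0.0}
```

Passing the full enrolment list, rather than only users who appear in the logs, keeps non-users visible, which matters when deciding how much supervision or support to add.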

Product uptake

Before measuring specific user outcomes, it is important to understand users' initial journeys on the platform, since this uncovers any roadblocks to successful onboarding and adoption. This step remains equally relevant after deployment at scale, as even minor feature changes can significantly affect uptake.
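One simple way to look for onboarding roadblocks is a step-by-step funnel. The sketch assumes you can count how many users reached each onboarding step; the step names, counts and function name are hypothetical. It returns the conversion rate between consecutive steps and flags the transition with the steepest drop-off.

```python
def funnel_report(steps):
    """steps: ordered list of (step_name, number_of_users_who_reached_it).

    Returns (transitions, worst): the conversion rate for each consecutive pair
    of steps, and the transition with the lowest rate.
    """
    if len(steps) < 2:
        raise ValueError("need at least two funnel steps")
    transitions = []
    for (prev_name, prev_n), (name, n) in zip(steps, steps[1:]):
        rate = n / prev_n if prev_n else 0.0
        transitions.append((f"{prev_name} -> {name}", rate))
    worst = min(transitions, key=lambda t: t[1])
    return transitions, worst


# Hypothetical onboarding steps and user counts.
steps = [
    ("invited", 500),
    ("account created", 400),
    ("first lesson started", 360),
    ("first lesson completed", 180),
]
transitions, worst = funnel_report(steps)
for label, rate in transitions:
    print(f"{label}: {rate:.0%}")
print("largest drop-off:", worst[0])
```

In this made-up funnel, only half of the users who start their first lesson finish it, so that step would be the first place to look for a usability problem.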

Understand the user experience

Once users are on the platform, we should go beyond uptake metrics to understand the quality of their interaction. This is a two-part process:

Experience and perceptions

First, assess users' subjective experiences: do they find the product intuitive and easy to use? User satisfaction can be measured with established methods such as the Net Promoter Score (NPS), collected through in-app surveys. The results can drive product design and show stakeholders how users perceive the product.
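The NPS calculation itself is simple arithmetic. Respondents answer "How likely are you to recommend this product?" on a 0 to 10 scale. Scores of 9 or 10 count as promoters and 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors, giving a value between -100 and 100. The sketch below assumes the in-app survey returns integer ratings; the function name and the sample responses are hypothetical.

```python
def net_promoter_score(ratings):
    """NPS from 0-10 'likelihood to recommend' ratings: % promoters minus % detractors."""
    if not ratings:
        raise ValueError("no survey responses")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(r >= 9 for r in ratings)   # 9 or 10
    detractors = sum(r <= 6 for r in ratings)  # 0 to 6; 7 and 8 are passives
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical in-app survey responses.
print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10, 9, 5]))  # 20.0
```

Passives (7 or 8) count towards the total number of responses but towards neither group, so five promoters and three detractors out of ten responses give an NPS of 20.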

Engagement: analysing usage data

Is time on the platform translating into meaningful interaction as defined by the product's ToC? Are users engaging as intended, or dropping off at specific points? What separates power users from the rest? Checking this now helps ensure that more formal evaluations later on are not undermined by poor user engagement.
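Drop-off points can be made visible with a simple retention curve. The sketch assumes each user's activity has already been reduced to the set of weeks, counted from that user's first week, in which they had at least one meaningful interaction as defined by the ToC. That definition, the data shape and the function names are hypothetical. It returns the share of the cohort active in each week and the week with the steepest fall.

```python
def retention_curve(active_weeks, n_weeks):
    """Share of the cohort active in each week.

    active_weeks: {user_id: set of week indices (0 = the user's first week)
                   in which the user had at least one meaningful interaction}.
    """
    cohort = len(active_weeks)
    if cohort == 0:
        raise ValueError("empty cohort")
    return [
        sum(week in weeks for weeks in active_weeks.values()) / cohort
        for week in range(n_weeks)
    ]


def steepest_drop(curve):
    """Week index at which retention falls most compared with the previous week."""
    if len(curve) < 2:
        raise ValueError("need at least two weeks")
    drops = [curve[k - 1] - curve[k] for k in range(1, len(curve))]
    return 1 + max(range(len(drops)), key=drops.__getitem__)


# Hypothetical cohort of four users.
active = {"u1": {0, 1, 2, 3}, "u2": {0, 1}, "u3": {0}, "u4": {0, 1}}
curve = retention_curve(active, n_weeks=4)
print(curve)                 # [1.0, 0.75, 0.25, 0.25]
print(steepest_drop(curve))  # 2
```

Aligning weeks to each user's own start, rather than to calendar weeks, keeps late joiners from looking like early drop-outs.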

Measure the impact

Once the product has been refined and users are onboarded and meaningfully engaged, we can plan to measure its educational impact. This is the validation that most stakeholders, such as policymakers, school district leaders and funders, look for. Our framework addresses it in three distinct stages:

Intermediate user changes

Impact is more than just test scores. Measure cognitive, affective (emotional), and behavioural changes in users. How does students' cognitive engagement change? Do teachers feel more confident integrating technology into their teaching? Do students feel joy while learning through the app?

Impact (on outcomes of interest)

To measure learning outcomes, design an experiment, preferably a randomised one, so that causal claims can be made with confidence. Designs range from traditional, more expensive longitudinal RCTs to rapid-cycle evaluations lasting six to eight weeks.
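For a rapid-cycle evaluation, two core calculations are random assignment and a standardised effect size. The sketch below randomises individual students with a fixed seed, so the allocation can be reproduced and audited, and reports Cohen's d: the difference in mean post-test scores divided by the pooled standard deviation. It is a minimal illustration under simplifying assumptions. Real trials often randomise whole classes or schools, adjust for baseline scores and report confidence intervals, none of which is shown here. The IDs, scores and function names are hypothetical toy values.

```python
import random
import statistics


def randomise(student_ids, seed=2024):
    """Shuffle IDs with a fixed seed and split them into (treatment, control) arms."""
    ids = list(student_ids)
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


def cohens_d(treatment_scores, control_scores):
    """Difference in means divided by the pooled (sample) standard deviation."""
    n_t, n_c = len(treatment_scores), len(control_scores)
    if n_t < 2 or n_c < 2:
        raise ValueError("each arm needs at least two scores")
    pooled_var = (
        (n_t - 1) * statistics.variance(treatment_scores)
        + (n_c - 1) * statistics.variance(control_scores)
    ) / (n_t + n_c - 2)
    diff = statistics.mean(treatment_scores) - statistics.mean(control_scores)
    return diff / pooled_var ** 0.5


treatment, control = randomise(range(1, 11))
print(len(treatment), len(control))  # 5 5

# Hypothetical post-test scores collected at the end of the evaluation window.
print(round(cohens_d([62, 70, 68, 75, 65], [60, 63, 58, 66, 63]), 2))  # 1.46
```

The toy scores give an unrealistically large effect; they are chosen only so the arithmetic is easy to follow.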

Cost-effectiveness

To plan for future scale-up, a detailed account of actual intervention costs is essential. By separating fixed and variable costs, we can calculate the true impact per dollar spent, providing a clear measure of the solution's value and return on investment for governments and funders.
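Separating fixed and variable costs matters because fixed costs are spread over more students as the programme grows. The sketch below uses hypothetical figures ($50,000 of fixed costs and $8 of variable cost per student) and expresses impact as learning gain per $100 spent per student, a scaling chosen here for readability. The class and method names are illustrative. It also holds the effect size constant across scales, which is itself an assumption that scale-up evaluations should test.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    fixed_costs: float                # costs that do not grow with enrolment (e.g. development, set-up)
    variable_cost_per_student: float  # costs incurred for each additional student

    def cost_per_student(self, n_students: int) -> float:
        """Average cost per student: fixed costs spread over the cohort plus variable costs."""
        if n_students <= 0:
            raise ValueError("n_students must be positive")
        return self.fixed_costs / n_students + self.variable_cost_per_student

    def effect_per_100_dollars(self, effect_size_sd: float, n_students: int) -> float:
        """Learning gain in standard deviations per $100 spent per student."""
        return effect_size_sd / self.cost_per_student(n_students) * 100


# Hypothetical figures; the 0.2 SD effect is assumed constant across scales.
model = CostModel(fixed_costs=50_000, variable_cost_per_student=8)
for n in (2_000, 10_000):
    print(n, model.cost_per_student(n), round(model.effect_per_100_dollars(0.2, n), 2))
```

With these numbers, the cost per student falls from $33 at 2,000 students to $13 at 10,000, so the same 0.2 SD effect rises from about 0.6 to about 1.5 SD per $100 per student.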